Mirror of https://github.com/dino/dino.git, synced 2025-07-05 00:03:04 -04:00

Compare commits

No commits in common. "master" and "v0.3.0" have entirely different histories.

17

.github/matchers/gcc-problem-matcher.json

vendored

@@ -1,17 +0,0 @@

{
    "problemMatcher": [
        {
            "owner": "gcc-problem-matcher",
            "pattern": [
                {
                    "regexp": "^(.*?):(\\d+):(\\d*):?\\s+(?:fatal\\s+)?(warning|error):\\s+(.*)$",
                    "file": 1,
                    "line": 2,
                    "column": 3,
                    "severity": 4,
                    "message": 5
                }
            ]
        }
    ]
}

17

.github/matchers/meson-problem-matcher.json

vendored

@@ -1,17 +0,0 @@

{
    "problemMatcher": [
        {
            "owner": "meson-problem-matcher",
            "pattern": [
                {
                    "regexp": "^(.*?)?:(\\d+)?:(\\d+)?: (WARNING|ERROR):\\s+(.*)$",
                    "file": 1,
                    "line": 2,
                    "column": 3,
                    "severity": 4,
                    "message": 5
                }
            ]
        }
    ]
}

17

.github/matchers/vala-problem-matcher.json

vendored

@@ -1,17 +0,0 @@

{
    "problemMatcher": [
        {
            "owner": "vala-problem-matcher",
            "pattern": [
                {
                    "regexp": "^(?:../)?(.*?):(\\d+).(\\d+)-\\d+.\\d+:?\\s+(?:fatal\\s+)?(warning|error):\\s+(.*)$",
                    "file": 1,
                    "line": 2,
                    "column": 3,
                    "severity": 4,
                    "message": 5
                }
            ]
        }
    ]
}
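Each of the three matcher files above relies on one regular expression with five capture groups (file, line, column, severity, message). As a rough check of what those patterns accept, the following minimal Python sketch applies them to sample diagnostic lines. The sample lines are hypothetical and not taken from a real build log, the `parse` helper is an illustrative name, and Python's `re` module is assumed to agree with the engine used by the CI runner for these simple patterns.

```python
import re

# Patterns copied from the matcher files, with the JSON string escaping removed.
PATTERNS = {
    "gcc": r"^(.*?):(\d+):(\d*):?\s+(?:fatal\s+)?(warning|error):\s+(.*)$",
    "meson": r"^(.*?)?:(\d+)?:(\d+)?: (WARNING|ERROR):\s+(.*)$",
    "vala": r"^(?:../)?(.*?):(\d+).(\d+)-\d+.\d+:?\s+(?:fatal\s+)?(warning|error):\s+(.*)$",
}

# Field order matches the "file": 1 ... "message": 5 indices in the JSON.
FIELDS = ("file", "line", "column", "severity", "message")


def parse(kind, text):
    """Return the five matcher fields as a dict, or None if the line does not match."""
    match = re.match(PATTERNS[kind], text)
    if match is None:
        return None
    return dict(zip(FIELDS, match.groups()))


# Hypothetical sample lines, made up for illustration.
SAMPLES = {
    "gcc": "src/main.c:42:7: warning: unused variable 'x'",
    "meson": 'meson.build:12:4: ERROR: Unknown variable "foo".',
    "vala": "../libdino/src/util.vala:10.5-10.20: warning: local variable is unused",
}

if __name__ == "__main__":
    for kind, sample in SAMPLES.items():
        print(kind, parse(kind, sample))
```

Note that the Vala pattern leaves its dots unescaped, so `.` there matches any character, not only a literal dot.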

46

.github/workflows/build.yml

vendored

@@ -2,42 +2,12 @@ name: Build

 on: [pull_request, push]
 jobs:
   build:
-    name: "Build"
-    runs-on: ubuntu-24.04
+    runs-on: ubuntu-20.04
     steps:
-      - name: "Checkout sources"
-        uses: actions/checkout@v4
-        with:
-          fetch-depth: 0
-      - name: "Setup matchers"
-        run: |
-          echo '::add-matcher::${{ github.workspace }}/.github/matchers/gcc-problem-matcher.json'
-          echo '::add-matcher::${{ github.workspace }}/.github/matchers/vala-problem-matcher.json'
-          echo '::add-matcher::${{ github.workspace }}/.github/matchers/meson-problem-matcher.json'
-      - name: "Setup dependencies"
-        run: |
-          sudo apt-get update
-          sudo apt-get remove libunwind-14-dev
-          sudo apt-get install -y build-essential gettext libadwaita-1-dev libcanberra-dev libgcrypt20-dev libgee-0.8-dev libgpgme-dev libgstreamer-plugins-base1.0-dev libgstreamer1.0-dev libgtk-4-dev libnice-dev libnotify-dev libqrencode-dev libsignal-protocol-c-dev libsoup-3.0-dev libsqlite3-dev libsrtp2-dev libwebrtc-audio-processing-dev meson valac
-      - name: "Configure"
-        run: meson setup build
-      - name: "Build"
-        run: meson compile -C build
-      - name: "Test"
-        run: meson test -C build
-  build-flatpak:
-    name: "Build flatpak"
-    runs-on: ubuntu-24.04
-    container:
-      image: bilelmoussaoui/flatpak-github-actions:gnome-46
-      options: --privileged
-    steps:
-      - name: "Checkout sources"
-        uses: actions/checkout@v4
-        with:
-          fetch-depth: 0
-      - name: "Build"
-        uses: flathub-infra/flatpak-github-actions/flatpak-builder@master
-        with:
-          manifest-path: im.dino.Dino.json
-          bundle: im.dino.Dino.flatpak
+      - uses: actions/checkout@v2
+      - run: sudo apt-get update
+      - run: sudo apt-get install -y build-essential gettext cmake valac libgee-0.8-dev libsqlite3-dev libgtk-3-dev libnotify-dev libgpgme-dev libsoup2.4-dev libgcrypt20-dev libqrencode-dev libgspell-1-dev libnice-dev libgstreamer1.0-dev libgstreamer-plugins-base1.0-dev libsrtp2-dev libwebrtc-audio-processing-dev
+      - run: ./configure --with-tests --with-libsignal-in-tree
+      - run: make
+      - run: build/xmpp-vala-test
+      - run: build/signal-protocol-vala-test

4

.gitmodules

vendored

Normal file

@@ -0,0 +1,4 @@

[submodule "libsignal-protocol-c"]
    path = plugins/signal-protocol/libsignal-protocol-c
    url = https://github.com/WhisperSystems/libsignal-protocol-c.git
    branch = v2.3.3

212

CMakeLists.txt

Normal file

@@ -0,0 +1,212 @@

cmake_minimum_required(VERSION 3.3)
list(APPEND CMAKE_MODULE_PATH ${CMAKE_SOURCE_DIR}/cmake)
include(ComputeVersion)
if (NOT VERSION_FOUND)
    project(Dino LANGUAGES C CXX)
elseif (VERSION_IS_RELEASE)
    project(Dino VERSION ${VERSION_FULL} LANGUAGES C CXX)
else ()
    project(Dino LANGUAGES C CXX)
    set(PROJECT_VERSION ${VERSION_FULL})
endif ()

# Prepare Plugins
set(DEFAULT_PLUGINS omemo;openpgp;http-files;ice;rtp)
foreach (plugin ${DEFAULT_PLUGINS})
    if ("$CACHE{DINO_PLUGIN_ENABLED_${plugin}}" STREQUAL "")
        if (NOT DEFINED DINO_PLUGIN_ENABLED_${plugin})
            set(DINO_PLUGIN_ENABLED_${plugin} "yes" CACHE BOOL "Enable plugin ${plugin}")
        else ()
            set(DINO_PLUGIN_ENABLED_${plugin} "${DINO_PLUGIN_ENABLED_${plugin}}" CACHE BOOL "Enable plugin ${plugin}" FORCE)
        endif ()
        if (DINO_PLUGIN_ENABLED_${plugin})
            message(STATUS "Enabled plugin: ${plugin}")
        else ()
            message(STATUS "Disabled plugin: ${plugin}")
        endif ()
    endif ()
endforeach (plugin)

if (DISABLED_PLUGINS)
    foreach(plugin ${DISABLED_PLUGINS})
        set(DINO_PLUGIN_ENABLED_${plugin} "no" CACHE BOOL "Enable plugin ${plugin}" FORCE)
        message(STATUS "Disabled plugin: ${plugin}")
    endforeach(plugin)
endif (DISABLED_PLUGINS)

if (ENABLED_PLUGINS)
    foreach(plugin ${ENABLED_PLUGINS})
        set(DINO_PLUGIN_ENABLED_${plugin} "yes" CACHE BOOL "Enable plugin ${plugin}" FORCE)
        message(STATUS "Enabled plugin: ${plugin}")
    endforeach(plugin)
endif (ENABLED_PLUGINS)

set(PLUGINS "")
get_cmake_property(all_variables VARIABLES)
foreach (variable_name ${all_variables})
    if (variable_name MATCHES "^DINO_PLUGIN_ENABLED_(.+)$" AND ${variable_name})
        list(APPEND PLUGINS ${CMAKE_MATCH_1})
    endif()
endforeach ()
list(SORT PLUGINS)
string(REPLACE ";" ", " PLUGINS_TEXT "${PLUGINS}")

message(STATUS "Configuring Dino ${PROJECT_VERSION} with plugins: ${PLUGINS_TEXT}")

# Prepare install paths
macro(set_path what val desc)
    if (NOT ${what})
        unset(${what} CACHE)
        set(${what} ${val})
    endif ()
    if (NOT "${${what}}" STREQUAL "${_${what}_SET}")
        message(STATUS "${desc}: ${${what}}")
        set(_${what}_SET ${${what}} CACHE INTERNAL ${desc})
    endif()
endmacro(set_path)

string(REGEX REPLACE "^liblib" "lib" LIBDIR_NAME "lib${LIB_SUFFIX}")
set_path(CMAKE_INSTALL_PREFIX "${CMAKE_INSTALL_PREFIX}" "Installation directory for architecture-independent files")
set_path(EXEC_INSTALL_PREFIX "${CMAKE_INSTALL_PREFIX}" "Installation directory for architecture-dependent files")
set_path(SHARE_INSTALL_PREFIX "${CMAKE_INSTALL_PREFIX}/share" "Installation directory for read-only architecture-independent data")

set_path(BIN_INSTALL_DIR "${EXEC_INSTALL_PREFIX}/bin" "Installation directory for user executables")
set_path(DATA_INSTALL_DIR "${SHARE_INSTALL_PREFIX}/dino" "Installation directory for dino-specific data")
set_path(APPDATA_FILE_INSTALL_DIR "${SHARE_INSTALL_PREFIX}/metainfo" "Installation directory for .appdata.xml files")
set_path(DESKTOP_FILE_INSTALL_DIR "${SHARE_INSTALL_PREFIX}/applications" "Installation directory for .desktop files")
set_path(SERVICE_FILE_INSTALL_DIR "${SHARE_INSTALL_PREFIX}/dbus-1/services" "Installation directory for .service files")
set_path(ICON_INSTALL_DIR "${SHARE_INSTALL_PREFIX}/icons" "Installation directory for icons")
set_path(INCLUDE_INSTALL_DIR "${EXEC_INSTALL_PREFIX}/include" "Installation directory for C header files")
set_path(LIB_INSTALL_DIR "${EXEC_INSTALL_PREFIX}/${LIBDIR_NAME}" "Installation directory for object code libraries")
set_path(LOCALE_INSTALL_DIR "${SHARE_INSTALL_PREFIX}/locale" "Installation directory for locale files")
set_path(PLUGIN_INSTALL_DIR "${LIB_INSTALL_DIR}/dino/plugins" "Installation directory for dino plugin object code files")
set_path(VAPI_INSTALL_DIR "${SHARE_INSTALL_PREFIX}/vala/vapi" "Installation directory for Vala API files")

set(TARGET_INSTALL LIBRARY DESTINATION ${LIB_INSTALL_DIR} RUNTIME DESTINATION ${BIN_INSTALL_DIR} PUBLIC_HEADER DESTINATION ${INCLUDE_INSTALL_DIR} ARCHIVE DESTINATION ${LIB_INSTALL_DIR})
set(PLUGIN_INSTALL LIBRARY DESTINATION ${PLUGIN_INSTALL_DIR} RUNTIME DESTINATION ${PLUGIN_INSTALL_DIR})

include(CheckCCompilerFlag)
include(CheckCSourceCompiles)

macro(AddCFlagIfSupported list flag)
    string(REGEX REPLACE "[^a-z^A-Z^_^0-9]+" "_" flag_name ${flag})
    check_c_compiler_flag(${flag} COMPILER_SUPPORTS${flag_name})
    if (${COMPILER_SUPPORTS${flag_name}})
        set(${list} "${${list}} ${flag}")
    endif ()
endmacro()


if ("Ninja" STREQUAL ${CMAKE_GENERATOR})
    AddCFlagIfSupported(CMAKE_C_FLAGS -fdiagnostics-color)
endif ()

# Flags for all C files
AddCFlagIfSupported(CMAKE_C_FLAGS -Wall)
AddCFlagIfSupported(CMAKE_C_FLAGS -Wextra)
AddCFlagIfSupported(CMAKE_C_FLAGS -Werror=format-security)
AddCFlagIfSupported(CMAKE_C_FLAGS -Wno-duplicate-decl-specifier)
AddCFlagIfSupported(CMAKE_C_FLAGS -fno-omit-frame-pointer)

if (NOT VALA_WARN)
    set(VALA_WARN "conversion")
endif ()
set(VALA_WARN "${VALA_WARN}" CACHE STRING "Which warnings to show when invoking C compiler on Vala compiler output")
set_property(CACHE VALA_WARN PROPERTY STRINGS "all;unused;qualifier;conversion;deprecated;format;none")

# Vala generates some unused stuff
if (NOT ("all" IN_LIST VALA_WARN OR "unused" IN_LIST VALA_WARN))
    AddCFlagIfSupported(VALA_CFLAGS -Wno-unused-but-set-variable)
    AddCFlagIfSupported(VALA_CFLAGS -Wno-unused-function)
    AddCFlagIfSupported(VALA_CFLAGS -Wno-unused-label)
    AddCFlagIfSupported(VALA_CFLAGS -Wno-unused-parameter)
    AddCFlagIfSupported(VALA_CFLAGS -Wno-unused-value)
    AddCFlagIfSupported(VALA_CFLAGS -Wno-unused-variable)
endif ()

if (NOT ("all" IN_LIST VALA_WARN OR "qualifier" IN_LIST VALA_WARN))
    AddCFlagIfSupported(VALA_CFLAGS -Wno-discarded-qualifiers)
    AddCFlagIfSupported(VALA_CFLAGS -Wno-discarded-array-qualifiers)
    AddCFlagIfSupported(VALA_CFLAGS -Wno-incompatible-pointer-types-discards-qualifiers)
endif ()

if (NOT ("all" IN_LIST VALA_WARN OR "deprecated" IN_LIST VALA_WARN))
    AddCFlagIfSupported(VALA_CFLAGS -Wno-deprecated-declarations)
endif ()

if (NOT ("all" IN_LIST VALA_WARN OR "format" IN_LIST VALA_WARN))
    AddCFlagIfSupported(VALA_CFLAGS -Wno-missing-braces)
endif ()

if (NOT ("all" IN_LIST VALA_WARN OR "conversion" IN_LIST VALA_WARN))
    AddCFlagIfSupported(VALA_CFLAGS -Wno-int-conversion)
    AddCFlagIfSupported(VALA_CFLAGS -Wno-pointer-sign)
    AddCFlagIfSupported(VALA_CFLAGS -Wno-incompatible-pointer-types)
endif ()

try_compile(__WITHOUT_FILE_OFFSET_BITS_64 ${CMAKE_CURRENT_BINARY_DIR} ${CMAKE_SOURCE_DIR}/cmake/LargeFileOffsets.c COMPILE_DEFINITIONS ${CMAKE_REQUIRED_DEFINITIONS})
if (NOT __WITHOUT_FILE_OFFSET_BITS_64)
    try_compile(__WITH_FILE_OFFSET_BITS_64 ${CMAKE_CURRENT_BINARY_DIR} ${CMAKE_SOURCE_DIR}/cmake/LargeFileOffsets.c COMPILE_DEFINITIONS ${CMAKE_REQUIRED_DEFINITIONS} -D_FILE_OFFSET_BITS=64)

    if (__WITH_FILE_OFFSET_BITS_64)
        AddCFlagIfSupported(CMAKE_C_FLAGS -D_FILE_OFFSET_BITS=64)
        message(STATUS "Enabled large file support using _FILE_OFFSET_BITS=64")
    else (__WITH_FILE_OFFSET_BITS_64)
        message(STATUS "Large file support not available")
    endif (__WITH_FILE_OFFSET_BITS_64)
    unset(__WITH_FILE_OFFSET_BITS_64)
endif (NOT __WITHOUT_FILE_OFFSET_BITS_64)
unset(__WITHOUT_FILE_OFFSET_BITS_64)

if ($ENV{USE_CCACHE})
    # Configure CCache if available
    find_program(CCACHE_BIN ccache)
    mark_as_advanced(CCACHE_BIN)
    if (CCACHE_BIN)
        message(STATUS "Using ccache")
        set_property(GLOBAL PROPERTY RULE_LAUNCH_COMPILE ${CCACHE_BIN})
        set_property(GLOBAL PROPERTY RULE_LAUNCH_LINK ${CCACHE_BIN})
    else (CCACHE_BIN)
        message(STATUS "USE_CCACHE was set but ccache was not found")
    endif (CCACHE_BIN)
endif ($ENV{USE_CCACHE})

if (NOT NO_DEBUG)
    set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -g")
    set(CMAKE_VALA_FLAGS "${CMAKE_VALA_FLAGS} -g")
endif (NOT NO_DEBUG)

set(CMAKE_RUNTIME_OUTPUT_DIRECTORY ${CMAKE_BINARY_DIR})
set(CMAKE_LIBRARY_OUTPUT_DIRECTORY ${CMAKE_BINARY_DIR})

set(GTK3_GLOBAL_VERSION 3.22)
set(GLib_GLOBAL_VERSION 2.38)
set(ICU_GLOBAL_VERSION 57)

if (NOT VALA_EXECUTABLE)
    unset(VALA_EXECUTABLE CACHE)
endif ()

find_package(Vala 0.34 REQUIRED)
if (VALA_VERSION VERSION_GREATER "0.34.90" AND VALA_VERSION VERSION_LESS "0.36.1" OR # Due to a bug on 0.36.0 (and pre-releases), we need to disable FAST_VAPI
        VALA_VERSION VERSION_EQUAL "0.44.10" OR VALA_VERSION VERSION_EQUAL "0.46.4" OR VALA_VERSION VERSION_EQUAL "0.47.1" OR # See Dino issue #646
        VALA_VERSION VERSION_EQUAL "0.40.21" OR VALA_VERSION VERSION_EQUAL "0.46.8" OR VALA_VERSION VERSION_EQUAL "0.48.4") # See Dino issue #816
    set(DISABLE_FAST_VAPI yes)
endif ()

include(${VALA_USE_FILE})
include(MultiFind)
include(GlibCompileResourcesSupport)

set(CMAKE_VALA_FLAGS "${CMAKE_VALA_FLAGS} --target-glib=${GLib_GLOBAL_VERSION}")

add_subdirectory(qlite)
add_subdirectory(xmpp-vala)
add_subdirectory(libdino)
add_subdirectory(main)
add_subdirectory(crypto-vala)
add_subdirectory(plugins)

# uninstall target
configure_file("${CMAKE_SOURCE_DIR}/cmake/cmake_uninstall.cmake.in" "${CMAKE_BINARY_DIR}/cmake_uninstall.cmake" IMMEDIATE @ONLY)
add_custom_target(uninstall COMMAND ${CMAKE_COMMAND} -P ${CMAKE_BINARY_DIR}/cmake_uninstall.cmake COMMENT "Uninstall the project...")
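The `DISABLE_FAST_VAPI` condition in CMakeLists.txt above combines one version window with six exact releases, which is easy to misread in a single `if`. The following Python sketch restates that condition as a plain function. The names `parse_version` and `fast_vapi_disabled` are illustrative, and the sketch assumes three-component numeric version strings; CMake's own `VERSION_*` operators compare component-wise and may treat other inputs differently.

```python
def parse_version(text):
    """Split a dotted version string such as "0.36.0" into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


# Exact releases named in the CMake condition.
AFFECTED_RELEASES = {
    "0.44.10", "0.46.4", "0.47.1",  # see Dino issue #646
    "0.40.21", "0.46.8", "0.48.4",  # see Dino issue #816
}


def fast_vapi_disabled(vala_version):
    """Return True when the CMake condition would set DISABLE_FAST_VAPI.

    Assumes a three-component numeric version string such as "0.48.4".
    """
    version = parse_version(vala_version)
    # Strictly greater than 0.34.90 and strictly less than 0.36.1,
    # i.e. 0.36.0 and its pre-releases.
    in_0_36_window = parse_version("0.34.90") < version < parse_version("0.36.1")
    return in_0_36_window or vala_version in AFFECTED_RELEASES


if __name__ == "__main__":
    for candidate in ("0.34.90", "0.36.0", "0.36.1", "0.48.4", "0.56.0"):
        print(candidate, fast_vapi_disabled(candidate))
```

Both ends of the window are exclusive, so 0.34.90 and 0.36.1 themselves keep FAST_VAPI enabled.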

20

README.md

20

README.md

@ -1,11 +1,7 @@

|

||||

<img src="https://dino.im/img/logo.svg" width="80">

|

||||

|

||||

=======

|

||||

|

||||

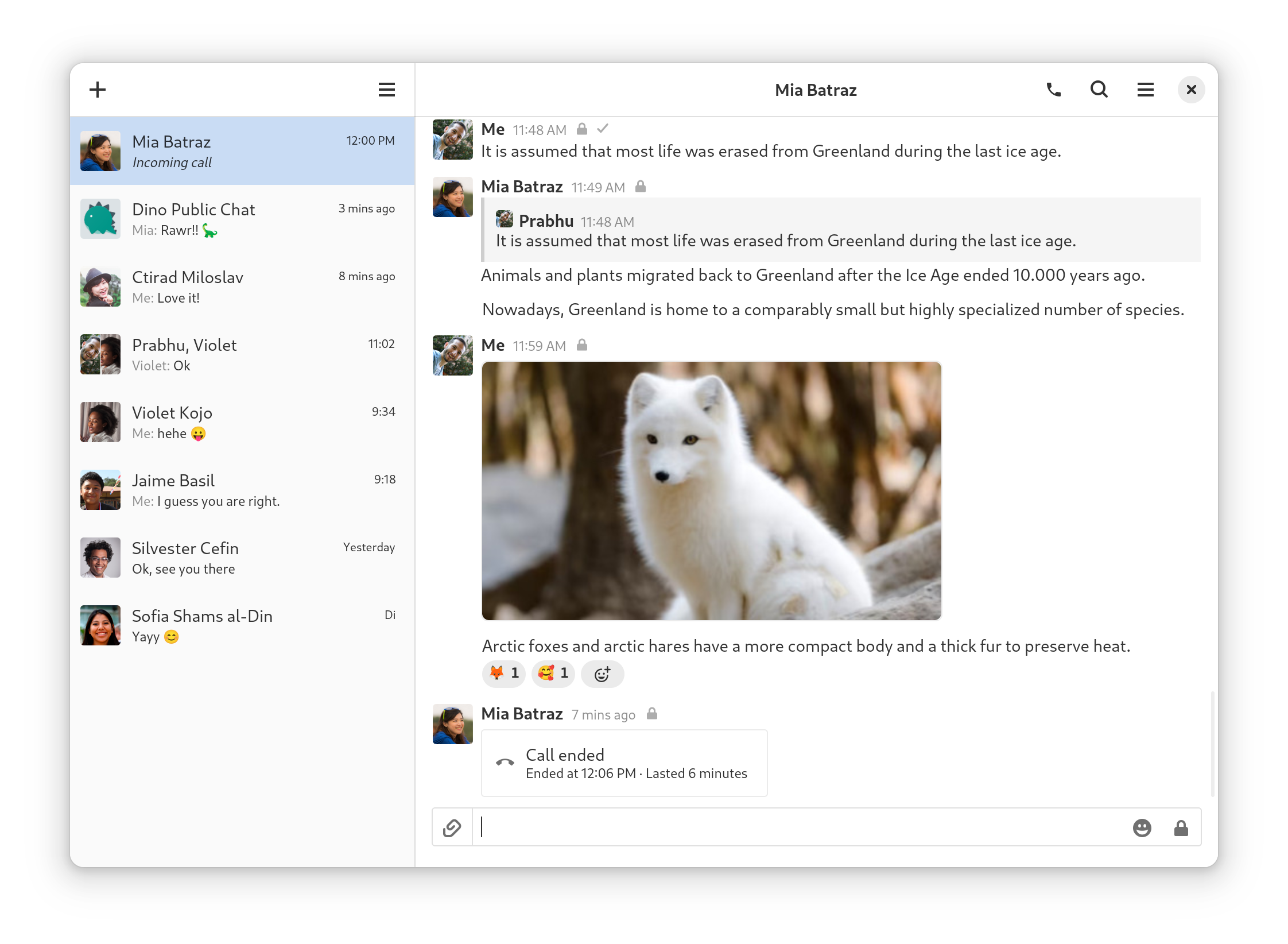

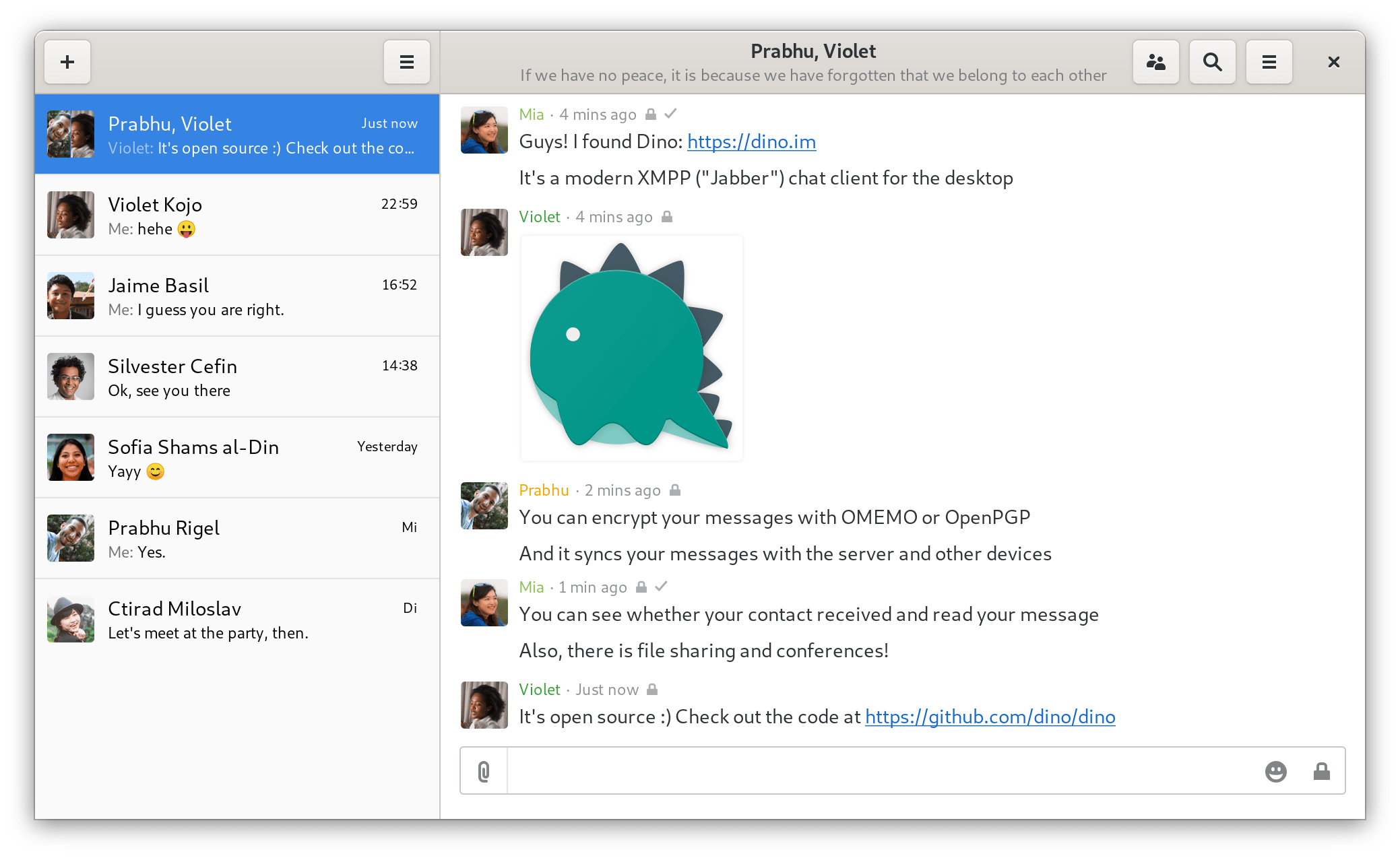

# Dino

|

||||

|

||||

Dino is an XMPP messaging app for Linux using GTK and Vala.

|

||||

It supports calls, encryption, file transfers, group chats and more.

|

||||

|

||||

|

||||

|

||||

|

||||

Installation

|

||||

------------

@@ -15,9 +11,9 @@ Build

 -----
 Make sure to install all [dependencies](https://github.com/dino/dino/wiki/Build#dependencies).

-    meson setup build
-    meson compile -C build
-    build/main/dino
+    ./configure
+    make
+    build/dino

 Resources
 ---------

@@ -34,8 +30,8 @@ Contribute

 License
 -------
-    Dino - XMPP messaging app using GTK/Vala
-    Copyright (C) 2016-2025 Dino contributors
+    Dino - Modern Jabber/XMPP Client using GTK+/Vala
+    Copyright (C) 2016-2022 Dino contributors

     This program is free software: you can redistribute it and/or modify
     it under the terms of the GNU General Public License as published by

57

cmake/BuildTargetScript.cmake

Normal file

@@ -0,0 +1,57 @@

# This file is meant to be invoked at build time. It generates the needed
# resource XML file.

# Input variables that need to be provided when invoking this script:
# GXML_OUTPUT             The output file path where to save the XML file.
# GXML_COMPRESS_ALL       Sets all COMPRESS flags in all resources in resource
#                         list.
# GXML_NO_COMPRESS_ALL    Removes all COMPRESS flags in all resources in
#                         resource list.
# GXML_STRIPBLANKS_ALL    Sets all STRIPBLANKS flags in all resources in
#                         resource list.
# GXML_NO_STRIPBLANKS_ALL Removes all STRIPBLANKS flags in all resources in
#                         resource list.
# GXML_TOPIXDATA_ALL      Sets all TOPIXDATA flags in all resources in resource
#                         list.
# GXML_NO_TOPIXDATA_ALL   Removes all TOPIXDATA flags in all resources in
#                         resource list.
# GXML_PREFIX             Overrides the resource prefix that is prepended to
#                         each relative name in registered resources.
# GXML_RESOURCES          The list of resource files. Whether absolute or
#                         relative path is equal.

# Include the GENERATE_GXML() function.
include(${CMAKE_CURRENT_LIST_DIR}/GenerateGXML.cmake)

# Set flags to actual invocation flags.
if(GXML_COMPRESS_ALL)
    set(GXML_COMPRESS_ALL COMPRESS_ALL)
endif()
if(GXML_NO_COMPRESS_ALL)
    set(GXML_NO_COMPRESS_ALL NO_COMPRESS_ALL)
endif()
if(GXML_STRIPBLANKS_ALL)
    set(GXML_STRIPBLANKS_ALL STRIPBLANKS_ALL)
endif()
if(GXML_NO_STRIPBLANKS_ALL)
    set(GXML_NO_STRIPBLANKS_ALL NO_STRIPBLANKS_ALL)
endif()
if(GXML_TOPIXDATA_ALL)
    set(GXML_TOPIXDATA_ALL TOPIXDATA_ALL)
endif()
if(GXML_NO_TOPIXDATA_ALL)
    set(GXML_NO_TOPIXDATA_ALL NO_TOPIXDATA_ALL)
endif()

# Replace " " with ";" to import the list over the command line. Otherwise
# CMake would interpret the passed resources as a whole string.
string(REPLACE " " ";" GXML_RESOURCES ${GXML_RESOURCES})

# Invoke the gresource XML generation function.
generate_gxml(${GXML_OUTPUT}
              ${GXML_COMPRESS_ALL} ${GXML_NO_COMPRESS_ALL}
              ${GXML_STRIPBLANKS_ALL} ${GXML_NO_STRIPBLANKS_ALL}
              ${GXML_TOPIXDATA_ALL} ${GXML_NO_TOPIXDATA_ALL}
              PREFIX ${GXML_PREFIX}
              RESOURCES ${GXML_RESOURCES})

221

cmake/CompileGResources.cmake

Normal file

@@ -0,0 +1,221 @@

include(CMakeParseArguments)

# Path to this file.
set(GCR_CMAKE_MACRO_DIR ${CMAKE_CURRENT_LIST_DIR})

# Compiles a gresource resource file from given resource files. Automatically
# creates the XML controlling file.
# The type of resource to generate (header, c-file or bundle) is automatically
# determined from TARGET file ending, if no TYPE is explicitly specified.
# The output file is stored in the provided variable "output".
# "xml_out" contains the variable where to output the XML path. Can be used to
# create custom targets or doing postprocessing.
# If you want to use preprocessing, you need to manually check the existence
# of the tools you use. This function doesn't check this for you, it just
# generates the XML file. glib-compile-resources will then throw a
# warning/error.
function(COMPILE_GRESOURCES output xml_out)
    # Available options:
    # COMPRESS_ALL, NO_COMPRESS_ALL       Overrides the COMPRESS flag in all
    #                                     registered resources.
    # STRIPBLANKS_ALL, NO_STRIPBLANKS_ALL Overrides the STRIPBLANKS flag in all
    #                                     registered resources.
    # TOPIXDATA_ALL, NO_TOPIXDATA_ALL     Overrides the TOPIXDATA flag in all
    #                                     registered resources.
    set(CG_OPTIONS COMPRESS_ALL NO_COMPRESS_ALL
                   STRIPBLANKS_ALL NO_STRIPBLANKS_ALL
                   TOPIXDATA_ALL NO_TOPIXDATA_ALL)

    # Available one value options:
    # TYPE        Type of resource to create. Valid options are:
    #             EMBED_C: A C-file that can be compiled with your project.
    #             EMBED_H: A header that can be included into your project.
    #             BUNDLE: Generates a resource bundle file that can be loaded
    #                     at runtime.
    #             AUTO: Determine from target file ending. Need to specify
    #                   target argument.
    # PREFIX      Overrides the resource prefix that is prepended to each
    #             relative file name in registered resources.
    # SOURCE_DIR  Overrides the resources base directory to search for resources.
    #             Normally this is set to the source directory with that CMake
    #             was invoked (CMAKE_SOURCE_DIR).
    # TARGET      Overrides the name of the output file/-s. Normally the output
    #             names from glib-compile-resources tool is taken.
    set(CG_ONEVALUEARGS TYPE PREFIX SOURCE_DIR TARGET)

    # Available multi-value options:
    # RESOURCES   The list of resource files. Whether absolute or relative path is
    #             equal, absolute paths are stripped down to relative ones. If the
    #             absolute path is not inside the given base directory SOURCE_DIR
    #             or CMAKE_SOURCE_DIR (if SOURCE_DIR is not overridden), this
    #             function aborts.
    # OPTIONS     Extra command line options passed to glib-compile-resources.
    set(CG_MULTIVALUEARGS RESOURCES OPTIONS)

    # Parse the arguments.
    cmake_parse_arguments(CG_ARG
                          "${CG_OPTIONS}"
                          "${CG_ONEVALUEARGS}"
                          "${CG_MULTIVALUEARGS}"
                          "${ARGN}")

    # Variable to store the double-quote (") string. Since escaping
    # double-quotes in strings is not possible we need a helper variable that
    # does this job for us.
    set(Q \")

    # Check invocation validity with the <prefix>_UNPARSED_ARGUMENTS variable.
    # If other not recognized parameters were passed, throw error.
    if (CG_ARG_UNPARSED_ARGUMENTS)
        set(CG_WARNMSG "Invocation of COMPILE_GRESOURCES with unrecognized")
        set(CG_WARNMSG "${CG_WARNMSG} parameters. Parameters are:")
        set(CG_WARNMSG "${CG_WARNMSG} ${CG_ARG_UNPARSED_ARGUMENTS}.")
        message(WARNING ${CG_WARNMSG})
    endif()

    # Check invocation validity depending on generation mode (EMBED_C, EMBED_H
    # or BUNDLE).
    if ("${CG_ARG_TYPE}" STREQUAL "EMBED_C")
        # EMBED_C mode, output compilable C-file.
        set(CG_GENERATE_COMMAND_LINE "--generate-source")
        set(CG_TARGET_FILE_ENDING "c")
    elseif ("${CG_ARG_TYPE}" STREQUAL "EMBED_H")
        # EMBED_H mode, output includable header file.
        set(CG_GENERATE_COMMAND_LINE "--generate-header")
        set(CG_TARGET_FILE_ENDING "h")
    elseif ("${CG_ARG_TYPE}" STREQUAL "BUNDLE")
        # BUNDLE mode, output resource bundle. Don't do anything since
        # glib-compile-resources outputs a bundle when not specifying
        # something else.
        set(CG_TARGET_FILE_ENDING "gresource")
    else()
        # Everything else is AUTO mode, determine from target file ending.
        if (CG_ARG_TARGET)
            set(CG_GENERATE_COMMAND_LINE "--generate")
        else()
            set(CG_ERRMSG "AUTO mode given, but no target specified. Can't")
            set(CG_ERRMSG "${CG_ERRMSG} determine output type. In function")
            set(CG_ERRMSG "${CG_ERRMSG} COMPILE_GRESOURCES.")
            message(FATAL_ERROR ${CG_ERRMSG})
        endif()
    endif()

    # Check flag validity.
    if (CG_ARG_COMPRESS_ALL AND CG_ARG_NO_COMPRESS_ALL)
        set(CG_ERRMSG "COMPRESS_ALL and NO_COMPRESS_ALL simultaneously set. In")
        set(CG_ERRMSG "${CG_ERRMSG} function COMPILE_GRESOURCES.")
        message(FATAL_ERROR ${CG_ERRMSG})
    endif()
    if (CG_ARG_STRIPBLANKS_ALL AND CG_ARG_NO_STRIPBLANKS_ALL)
        set(CG_ERRMSG "STRIPBLANKS_ALL and NO_STRIPBLANKS_ALL simultaneously")
        set(CG_ERRMSG "${CG_ERRMSG} set. In function COMPILE_GRESOURCES.")
        message(FATAL_ERROR ${CG_ERRMSG})
    endif()
    if (CG_ARG_TOPIXDATA_ALL AND CG_ARG_NO_TOPIXDATA_ALL)
        set(CG_ERRMSG "TOPIXDATA_ALL and NO_TOPIXDATA_ALL simultaneously set.")
        set(CG_ERRMSG "${CG_ERRMSG} In function COMPILE_GRESOURCES.")
        message(FATAL_ERROR ${CG_ERRMSG})
    endif()

    # Check if there are any resources.
    if (NOT CG_ARG_RESOURCES)
        set(CG_ERRMSG "No resource files to process. In function")
        set(CG_ERRMSG "${CG_ERRMSG} COMPILE_GRESOURCES.")
        message(FATAL_ERROR ${CG_ERRMSG})
    endif()

    # Extract all dependencies for targets from resource list.
    foreach(res ${CG_ARG_RESOURCES})
        if (NOT(("${res}" STREQUAL "COMPRESS") OR
                ("${res}" STREQUAL "STRIPBLANKS") OR
                ("${res}" STREQUAL "TOPIXDATA")))

            add_custom_command(
                OUTPUT "${CMAKE_CURRENT_BINARY_DIR}/resources/${res}"
                COMMAND ${CMAKE_COMMAND} -E copy "${CG_ARG_SOURCE_DIR}/${res}" "${CMAKE_CURRENT_BINARY_DIR}/resources/${res}"
                MAIN_DEPENDENCY "${CG_ARG_SOURCE_DIR}/${res}")
            list(APPEND CG_RESOURCES_DEPENDENCIES "${CMAKE_CURRENT_BINARY_DIR}/resources/${res}")
        endif()
    endforeach()


    # Construct .gresource.xml path.
    set(CG_XML_FILE_PATH "${CMAKE_CURRENT_BINARY_DIR}/resources/.gresource.xml")

    # Generate gresources XML target.
    list(APPEND CG_CMAKE_SCRIPT_ARGS "-D")
    list(APPEND CG_CMAKE_SCRIPT_ARGS "GXML_OUTPUT=${Q}${CG_XML_FILE_PATH}${Q}")
    if(CG_ARG_COMPRESS_ALL)
        list(APPEND CG_CMAKE_SCRIPT_ARGS "-D")
        list(APPEND CG_CMAKE_SCRIPT_ARGS "GXML_COMPRESS_ALL")
    endif()
    if(CG_ARG_NO_COMPRESS_ALL)
        list(APPEND CG_CMAKE_SCRIPT_ARGS "-D")
        list(APPEND CG_CMAKE_SCRIPT_ARGS "GXML_NO_COMPRESS_ALL")
    endif()
    if(CG_ARG_STRIPBLANKS_ALL)
        list(APPEND CG_CMAKE_SCRIPT_ARGS "-D")
        list(APPEND CG_CMAKE_SCRIPT_ARGS "GXML_STRIPBLANKS_ALL")
    endif()
    if(CG_ARG_NO_STRIPBLANKS_ALL)
        list(APPEND CG_CMAKE_SCRIPT_ARGS "-D")
        list(APPEND CG_CMAKE_SCRIPT_ARGS "GXML_NO_STRIPBLANKS_ALL")
    endif()
    if(CG_ARG_TOPIXDATA_ALL)
        list(APPEND CG_CMAKE_SCRIPT_ARGS "-D")
        list(APPEND CG_CMAKE_SCRIPT_ARGS "GXML_TOPIXDATA_ALL")
    endif()
    if(CG_ARG_NO_TOPIXDATA_ALL)
        list(APPEND CG_CMAKE_SCRIPT_ARGS "-D")
        list(APPEND CG_CMAKE_SCRIPT_ARGS "GXML_NO_TOPIXDATA_ALL")
    endif()
    list(APPEND CG_CMAKE_SCRIPT_ARGS "-D")
    list(APPEND CG_CMAKE_SCRIPT_ARGS "GXML_PREFIX=${Q}${CG_ARG_PREFIX}${Q}")
    list(APPEND CG_CMAKE_SCRIPT_ARGS "-D")
    list(APPEND CG_CMAKE_SCRIPT_ARGS
         "GXML_RESOURCES=${Q}${CG_ARG_RESOURCES}${Q}")
    list(APPEND CG_CMAKE_SCRIPT_ARGS "-P")
    list(APPEND CG_CMAKE_SCRIPT_ARGS
         "${Q}${GCR_CMAKE_MACRO_DIR}/BuildTargetScript.cmake${Q}")

    get_filename_component(CG_XML_FILE_PATH_ONLY_NAME
                           "${CG_XML_FILE_PATH}" NAME)
    set(CG_XML_CUSTOM_COMMAND_COMMENT
        "Creating gresources XML file (${CG_XML_FILE_PATH_ONLY_NAME})")
    add_custom_command(OUTPUT ${CG_XML_FILE_PATH}
                       COMMAND ${CMAKE_COMMAND}
                       ARGS ${CG_CMAKE_SCRIPT_ARGS}
                       DEPENDS ${CG_RESOURCES_DEPENDENCIES}
                       WORKING_DIRECTORY ${CMAKE_CURRENT_BINARY_DIR}
                       COMMENT ${CG_XML_CUSTOM_COMMAND_COMMENT})

    # Create target manually if not set (to make sure glib-compile-resources
    # doesn't change behaviour with its naming standards).
    if (NOT CG_ARG_TARGET)
        set(CG_ARG_TARGET "${CMAKE_CURRENT_BINARY_DIR}/resources")
        set(CG_ARG_TARGET "${CG_ARG_TARGET}.${CG_TARGET_FILE_ENDING}")
    endif()

    # Create source directory automatically if not set.
    if (NOT CG_ARG_SOURCE_DIR)
        set(CG_ARG_SOURCE_DIR "${CMAKE_SOURCE_DIR}")
    endif()

    # Add compilation target for resources.
    add_custom_command(OUTPUT ${CG_ARG_TARGET}
                       COMMAND ${GLIB_COMPILE_RESOURCES_EXECUTABLE}
                       ARGS
                           ${OPTIONS}
                           "--target=${Q}${CG_ARG_TARGET}${Q}"
                           "--sourcedir=${Q}${CG_ARG_SOURCE_DIR}${Q}"
                           ${CG_GENERATE_COMMAND_LINE}
                           ${CG_XML_FILE_PATH}
                       MAIN_DEPENDENCY ${CG_XML_FILE_PATH}
                       DEPENDS ${CG_RESOURCES_DEPENDENCIES}
                       WORKING_DIRECTORY ${CMAKE_BUILD_DIR})

    # Set output and XML_OUT to parent scope.
    set(${xml_out} ${CG_XML_FILE_PATH} PARENT_SCOPE)
    set(${output} ${CG_ARG_TARGET} PARENT_SCOPE)

endfunction()
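The TYPE handling in `COMPILE_GRESOURCES` above maps each mode to a `glib-compile-resources` generate flag and a default file ending, and falls back to AUTO mode for anything else. The Python sketch below restates that decision table. The function name `resolve_output_type` is illustrative and not part of the CMake module; `None` stands for a CMake variable that the function leaves unset.

```python
def resolve_output_type(resource_type=None, target=None):
    """Return (generate_flag, file_ending) following the TYPE branches above.

    None means the corresponding CMake variable is left unset.
    """
    explicit = {
        # EMBED_C: compilable C file.
        "EMBED_C": ("--generate-source", "c"),
        # EMBED_H: includable header file.
        "EMBED_H": ("--generate-header", "h"),
        # BUNDLE: no flag, because a bundle is the tool's default output.
        "BUNDLE": (None, "gresource"),
    }
    if resource_type in explicit:
        return explicit[resource_type]
    # Everything else is AUTO mode, which needs a target file name
    # so that the output type can be derived from its ending.
    if target:
        return ("--generate", None)
    raise ValueError("AUTO mode given, but no target specified")


if __name__ == "__main__":
    print(resolve_output_type("EMBED_C"))
    print(resolve_output_type(target="resources.c"))
```

Raising an exception here plays the role of `message(FATAL_ERROR ...)` in the CMake code.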

105

cmake/ComputeVersion.cmake

Normal file

@@ -0,0 +1,105 @@

include(CMakeParseArguments)

function(_compute_version_from_file)
    set_property(DIRECTORY APPEND PROPERTY CMAKE_CONFIGURE_DEPENDS ${CMAKE_SOURCE_DIR}/VERSION)
    if (NOT EXISTS ${CMAKE_SOURCE_DIR}/VERSION)
        set(VERSION_FOUND 0 PARENT_SCOPE)
        return()
    endif ()
    file(STRINGS ${CMAKE_SOURCE_DIR}/VERSION VERSION_FILE)
    string(REPLACE " " ";" VERSION_FILE "${VERSION_FILE}")
    cmake_parse_arguments(VERSION_FILE "" "RELEASE;PRERELEASE" "" ${VERSION_FILE})
    if (DEFINED VERSION_FILE_RELEASE)
        string(STRIP "${VERSION_FILE_RELEASE}" VERSION_FILE_RELEASE)
        set(VERSION_IS_RELEASE 1 PARENT_SCOPE)
        set(VERSION_FULL "${VERSION_FILE_RELEASE}" PARENT_SCOPE)
        set(VERSION_FOUND 1 PARENT_SCOPE)
    elseif (DEFINED VERSION_FILE_PRERELEASE)
        string(STRIP "${VERSION_FILE_PRERELEASE}" VERSION_FILE_PRERELEASE)
        set(VERSION_IS_RELEASE 0 PARENT_SCOPE)
        set(VERSION_FULL "${VERSION_FILE_PRERELEASE}" PARENT_SCOPE)
        set(VERSION_FOUND 1 PARENT_SCOPE)
    else ()
        set(VERSION_FOUND 0 PARENT_SCOPE)
    endif ()
endfunction(_compute_version_from_file)

function(_compute_version_from_git)
    set_property(DIRECTORY APPEND PROPERTY CMAKE_CONFIGURE_DEPENDS ${CMAKE_SOURCE_DIR}/.git)
    if (NOT GIT_EXECUTABLE)
        find_package(Git QUIET)
        if (NOT GIT_FOUND)
            return()
        endif ()
    endif (NOT GIT_EXECUTABLE)

    # Git tag
    execute_process(
            COMMAND "${GIT_EXECUTABLE}" describe --tags --abbrev=0
            WORKING_DIRECTORY "${CMAKE_SOURCE_DIR}"
            RESULT_VARIABLE git_result
            OUTPUT_VARIABLE git_tag
            ERROR_VARIABLE git_error
            OUTPUT_STRIP_TRAILING_WHITESPACE
            ERROR_STRIP_TRAILING_WHITESPACE
    )
    if (NOT git_result EQUAL 0)
        return()
    endif (NOT git_result EQUAL 0)

    if (git_tag MATCHES "^v?([0-9]+[.]?[0-9]*[.]?[0-9]*)(-[.0-9A-Za-z-]+)?([+][.0-9A-Za-z-]+)?$")
        set(VERSION_LAST_RELEASE "${CMAKE_MATCH_1}")
    else ()
        return()
    endif ()

    # Git describe
    execute_process(
            COMMAND "${GIT_EXECUTABLE}" describe --tags
            WORKING_DIRECTORY "${CMAKE_SOURCE_DIR}"
            RESULT_VARIABLE git_result
            OUTPUT_VARIABLE git_describe
            ERROR_VARIABLE git_error
            OUTPUT_STRIP_TRAILING_WHITESPACE
            ERROR_STRIP_TRAILING_WHITESPACE
    )
    if (NOT git_result EQUAL 0)
        return()
    endif (NOT git_result EQUAL 0)

    if ("${git_tag}" STREQUAL "${git_describe}")
        set(VERSION_IS_RELEASE 1)
    else ()
        set(VERSION_IS_RELEASE 0)
        if (git_describe MATCHES "-([0-9]+)-g([0-9a-f]+)$")
            set(VERSION_TAG_OFFSET "${CMAKE_MATCH_1}")
            set(VERSION_COMMIT_HASH "${CMAKE_MATCH_2}")
        endif ()
        execute_process(
                COMMAND "${GIT_EXECUTABLE}" show --format=%cd --date=format:%Y%m%d -s
                WORKING_DIRECTORY "${CMAKE_SOURCE_DIR}"
                RESULT_VARIABLE git_result
                OUTPUT_VARIABLE git_time
                ERROR_VARIABLE git_error
                OUTPUT_STRIP_TRAILING_WHITESPACE
                ERROR_STRIP_TRAILING_WHITESPACE

|

||||

)

|

||||

if (NOT git_result EQUAL 0)

|

||||

return()

|

||||

endif (NOT git_result EQUAL 0)

|

||||

set(VERSION_COMMIT_DATE "${git_time}")

|

||||

endif ()

|

||||

if (NOT VERSION_IS_RELEASE)

|

||||

set(VERSION_SUFFIX "~git${VERSION_TAG_OFFSET}.${VERSION_COMMIT_DATE}.${VERSION_COMMIT_HASH}")

|

||||

else (NOT VERSION_IS_RELEASE)

|

||||

set(VERSION_SUFFIX "")

|

||||

endif (NOT VERSION_IS_RELEASE)

|

||||

set(VERSION_IS_RELEASE ${VERSION_IS_RELEASE} PARENT_SCOPE)

|

||||

set(VERSION_FULL "${VERSION_LAST_RELEASE}${VERSION_SUFFIX}" PARENT_SCOPE)

|

||||

set(VERSION_FOUND 1 PARENT_SCOPE)

|

||||

endfunction(_compute_version_from_git)

|

||||

|

||||

_compute_version_from_file()

|

||||

if (NOT VERSION_FOUND)

|

||||

_compute_version_from_git()

|

||||

endif (NOT VERSION_FOUND)

|

||||

31

cmake/FindATK.cmake

Normal file

@ -0,0 +1,31 @@

include(PkgConfigWithFallback)
find_pkg_config_with_fallback(ATK
    PKG_CONFIG_NAME atk
    LIB_NAMES atk-1.0
    INCLUDE_NAMES atk/atk.h
    INCLUDE_DIR_SUFFIXES atk-1.0 atk-1.0/include
    DEPENDS GObject
)

if(ATK_FOUND AND NOT ATK_VERSION)
    find_file(ATK_VERSION_HEADER "atk/atkversion.h" HINTS ${ATK_INCLUDE_DIRS})
    mark_as_advanced(ATK_VERSION_HEADER)

    if(ATK_VERSION_HEADER)
        file(STRINGS "${ATK_VERSION_HEADER}" ATK_MAJOR_VERSION REGEX "^#define ATK_MAJOR_VERSION +\\(?([0-9]+)\\)?$")
        string(REGEX REPLACE "^#define ATK_MAJOR_VERSION \\(?([0-9]+)\\)?$" "\\1" ATK_MAJOR_VERSION "${ATK_MAJOR_VERSION}")
        file(STRINGS "${ATK_VERSION_HEADER}" ATK_MINOR_VERSION REGEX "^#define ATK_MINOR_VERSION +\\(?([0-9]+)\\)?$")
        string(REGEX REPLACE "^#define ATK_MINOR_VERSION \\(?([0-9]+)\\)?$" "\\1" ATK_MINOR_VERSION "${ATK_MINOR_VERSION}")
        file(STRINGS "${ATK_VERSION_HEADER}" ATK_MICRO_VERSION REGEX "^#define ATK_MICRO_VERSION +\\(?([0-9]+)\\)?$")
        string(REGEX REPLACE "^#define ATK_MICRO_VERSION \\(?([0-9]+)\\)?$" "\\1" ATK_MICRO_VERSION "${ATK_MICRO_VERSION}")
        set(ATK_VERSION "${ATK_MAJOR_VERSION}.${ATK_MINOR_VERSION}.${ATK_MICRO_VERSION}")
        unset(ATK_MAJOR_VERSION)
        unset(ATK_MINOR_VERSION)
        unset(ATK_MICRO_VERSION)
    endif()
endif()

include(FindPackageHandleStandardArgs)
find_package_handle_standard_args(ATK
    REQUIRED_VARS ATK_LIBRARY
    VERSION_VAR ATK_VERSION)

30

cmake/FindCairo.cmake

Normal file

@ -0,0 +1,30 @@

include(PkgConfigWithFallback)
find_pkg_config_with_fallback(Cairo
    PKG_CONFIG_NAME cairo
    LIB_NAMES cairo
    INCLUDE_NAMES cairo.h
    INCLUDE_DIR_SUFFIXES cairo cairo/include
)

if(Cairo_FOUND AND NOT Cairo_VERSION)
    find_file(Cairo_VERSION_HEADER "cairo-version.h" HINTS ${Cairo_INCLUDE_DIRS})
    mark_as_advanced(Cairo_VERSION_HEADER)

    if(Cairo_VERSION_HEADER)
        file(STRINGS "${Cairo_VERSION_HEADER}" Cairo_MAJOR_VERSION REGEX "^#define CAIRO_VERSION_MAJOR +\\(?([0-9]+)\\)?$")
        string(REGEX REPLACE "^#define CAIRO_VERSION_MAJOR \\(?([0-9]+)\\)?$" "\\1" Cairo_MAJOR_VERSION "${Cairo_MAJOR_VERSION}")
        file(STRINGS "${Cairo_VERSION_HEADER}" Cairo_MINOR_VERSION REGEX "^#define CAIRO_VERSION_MINOR +\\(?([0-9]+)\\)?$")
        string(REGEX REPLACE "^#define CAIRO_VERSION_MINOR \\(?([0-9]+)\\)?$" "\\1" Cairo_MINOR_VERSION "${Cairo_MINOR_VERSION}")
        file(STRINGS "${Cairo_VERSION_HEADER}" Cairo_MICRO_VERSION REGEX "^#define CAIRO_VERSION_MICRO +\\(?([0-9]+)\\)?$")
        string(REGEX REPLACE "^#define CAIRO_VERSION_MICRO \\(?([0-9]+)\\)?$" "\\1" Cairo_MICRO_VERSION "${Cairo_MICRO_VERSION}")
        set(Cairo_VERSION "${Cairo_MAJOR_VERSION}.${Cairo_MINOR_VERSION}.${Cairo_MICRO_VERSION}")
        unset(Cairo_MAJOR_VERSION)
        unset(Cairo_MINOR_VERSION)
        unset(Cairo_MICRO_VERSION)
    endif()
endif()

include(FindPackageHandleStandardArgs)
find_package_handle_standard_args(Cairo
    REQUIRED_VARS Cairo_LIBRARY
    VERSION_VAR Cairo_VERSION)

10

cmake/FindCanberra.cmake

Normal file

@ -0,0 +1,10 @@

include(PkgConfigWithFallback)
find_pkg_config_with_fallback(Canberra
    PKG_CONFIG_NAME libcanberra
    LIB_NAMES canberra
    INCLUDE_NAMES canberra.h
)

include(FindPackageHandleStandardArgs)
find_package_handle_standard_args(Canberra
    REQUIRED_VARS Canberra_LIBRARY)

10

cmake/FindGCrypt.cmake

Normal file

@ -0,0 +1,10 @@

include(PkgConfigWithFallbackOnConfigScript)
find_pkg_config_with_fallback_on_config_script(GCrypt
    PKG_CONFIG_NAME libgcrypt
    CONFIG_SCRIPT_NAME libgcrypt
)

include(FindPackageHandleStandardArgs)
find_package_handle_standard_args(GCrypt
    REQUIRED_VARS GCrypt_LIBRARY
    VERSION_VAR GCrypt_VERSION)

38

cmake/FindGDK3.cmake

Normal file

@ -0,0 +1,38 @@

include(PkgConfigWithFallback)
find_pkg_config_with_fallback(GDK3
    PKG_CONFIG_NAME gdk-3.0
    LIB_NAMES gdk-3
    INCLUDE_NAMES gdk/gdk.h
    INCLUDE_DIR_SUFFIXES gtk-3.0 gtk-3.0/include gtk+-3.0 gtk+-3.0/include
    DEPENDS Pango Cairo GDKPixbuf2
)

if(GDK3_FOUND AND NOT GDK3_VERSION)
    find_file(GDK3_VERSION_HEADER "gdk/gdkversionmacros.h" HINTS ${GDK3_INCLUDE_DIRS})
    mark_as_advanced(GDK3_VERSION_HEADER)

    if(GDK3_VERSION_HEADER)
        file(STRINGS "${GDK3_VERSION_HEADER}" GDK3_MAJOR_VERSION REGEX "^#define GDK_MAJOR_VERSION +\\(?([0-9]+)\\)?$")
        string(REGEX REPLACE "^#define GDK_MAJOR_VERSION \\(?([0-9]+)\\)?$" "\\1" GDK3_MAJOR_VERSION "${GDK3_MAJOR_VERSION}")
        file(STRINGS "${GDK3_VERSION_HEADER}" GDK3_MINOR_VERSION REGEX "^#define GDK_MINOR_VERSION +\\(?([0-9]+)\\)?$")
        string(REGEX REPLACE "^#define GDK_MINOR_VERSION \\(?([0-9]+)\\)?$" "\\1" GDK3_MINOR_VERSION "${GDK3_MINOR_VERSION}")
        file(STRINGS "${GDK3_VERSION_HEADER}" GDK3_MICRO_VERSION REGEX "^#define GDK_MICRO_VERSION +\\(?([0-9]+)\\)?$")
        string(REGEX REPLACE "^#define GDK_MICRO_VERSION \\(?([0-9]+)\\)?$" "\\1" GDK3_MICRO_VERSION "${GDK3_MICRO_VERSION}")
        set(GDK3_VERSION "${GDK3_MAJOR_VERSION}.${GDK3_MINOR_VERSION}.${GDK3_MICRO_VERSION}")
        unset(GDK3_MAJOR_VERSION)
        unset(GDK3_MINOR_VERSION)
        unset(GDK3_MICRO_VERSION)
    endif()
endif()

if (GDK3_FOUND)
    find_file(GDK3_WITH_X11 "gdk/gdkx.h" HINTS ${GDK3_INCLUDE_DIRS})
    if (GDK3_WITH_X11)
        set(GDK3_WITH_X11 yes CACHE INTERNAL "Does GDK3 support X11")
    endif (GDK3_WITH_X11)
endif ()

include(FindPackageHandleStandardArgs)
find_package_handle_standard_args(GDK3
    REQUIRED_VARS GDK3_LIBRARY
    VERSION_VAR GDK3_VERSION)

23

cmake/FindGDKPixbuf2.cmake

Normal file

@ -0,0 +1,23 @@

include(PkgConfigWithFallback)
find_pkg_config_with_fallback(GDKPixbuf2
    PKG_CONFIG_NAME gdk-pixbuf-2.0
    LIB_NAMES gdk_pixbuf-2.0
    INCLUDE_NAMES gdk-pixbuf/gdk-pixbuf.h
    INCLUDE_DIR_SUFFIXES gdk-pixbuf-2.0 gdk-pixbuf-2.0/include
    DEPENDS GLib
)

if(GDKPixbuf2_FOUND AND NOT GDKPixbuf2_VERSION)
    find_file(GDKPixbuf2_FEATURES_HEADER "gdk-pixbuf/gdk-pixbuf-features.h" HINTS ${GDKPixbuf2_INCLUDE_DIRS})
    mark_as_advanced(GDKPixbuf2_FEATURES_HEADER)

    if(GDKPixbuf2_FEATURES_HEADER)
        file(STRINGS "${GDKPixbuf2_FEATURES_HEADER}" GDKPixbuf2_VERSION REGEX "^#define GDK_PIXBUF_VERSION \\\"[^\\\"]+\\\"")
        string(REGEX REPLACE "^#define GDK_PIXBUF_VERSION \\\"([0-9]+)\\.([0-9]+)\\.([0-9]+)\\\"$" "\\1.\\2.\\3" GDKPixbuf2_VERSION "${GDKPixbuf2_VERSION}")
    endif()
endif()

include(FindPackageHandleStandardArgs)
find_package_handle_standard_args(GDKPixbuf2
    REQUIRED_VARS GDKPixbuf2_LIBRARY
    VERSION_VAR GDKPixbuf2_VERSION)

18

cmake/FindGIO.cmake

Normal file

@ -0,0 +1,18 @@

include(PkgConfigWithFallback)
find_pkg_config_with_fallback(GIO
    PKG_CONFIG_NAME gio-2.0
    LIB_NAMES gio-2.0
    INCLUDE_NAMES gio/gio.h
    INCLUDE_DIR_SUFFIXES glib-2.0 glib-2.0/include
    DEPENDS GObject
)

if(GIO_FOUND AND NOT GIO_VERSION)
    find_package(GLib ${GLib_GLOBAL_VERSION})
    set(GIO_VERSION ${GLib_VERSION})
endif()

include(FindPackageHandleStandardArgs)
find_package_handle_standard_args(GIO
    REQUIRED_VARS GIO_LIBRARY
    VERSION_VAR GIO_VERSION)

32

cmake/FindGLib.cmake

Normal file

@ -0,0 +1,32 @@

include(PkgConfigWithFallback)
find_pkg_config_with_fallback(GLib
    PKG_CONFIG_NAME glib-2.0
    LIB_NAMES glib-2.0
    INCLUDE_NAMES glib.h glibconfig.h
    INCLUDE_DIR_HINTS ${CMAKE_LIBRARY_PATH} ${CMAKE_SYSTEM_LIBRARY_PATH}
    INCLUDE_DIR_PATHS ${CMAKE_PREFIX_PATH}/lib64 ${CMAKE_PREFIX_PATH}/lib
    INCLUDE_DIR_SUFFIXES glib-2.0 glib-2.0/include
)

if(GLib_FOUND AND NOT GLib_VERSION)
    find_file(GLib_CONFIG_HEADER "glibconfig.h" HINTS ${GLib_INCLUDE_DIRS})
    mark_as_advanced(GLib_CONFIG_HEADER)

    if(GLib_CONFIG_HEADER)
        file(STRINGS "${GLib_CONFIG_HEADER}" GLib_MAJOR_VERSION REGEX "^#define GLIB_MAJOR_VERSION +([0-9]+)")
        string(REGEX REPLACE "^#define GLIB_MAJOR_VERSION ([0-9]+)$" "\\1" GLib_MAJOR_VERSION "${GLib_MAJOR_VERSION}")
        file(STRINGS "${GLib_CONFIG_HEADER}" GLib_MINOR_VERSION REGEX "^#define GLIB_MINOR_VERSION +([0-9]+)")
        string(REGEX REPLACE "^#define GLIB_MINOR_VERSION ([0-9]+)$" "\\1" GLib_MINOR_VERSION "${GLib_MINOR_VERSION}")
        file(STRINGS "${GLib_CONFIG_HEADER}" GLib_MICRO_VERSION REGEX "^#define GLIB_MICRO_VERSION +([0-9]+)")
        string(REGEX REPLACE "^#define GLIB_MICRO_VERSION ([0-9]+)$" "\\1" GLib_MICRO_VERSION "${GLib_MICRO_VERSION}")
        set(GLib_VERSION "${GLib_MAJOR_VERSION}.${GLib_MINOR_VERSION}.${GLib_MICRO_VERSION}")
        unset(GLib_MAJOR_VERSION)
        unset(GLib_MINOR_VERSION)
        unset(GLib_MICRO_VERSION)
    endif()
endif()

include(FindPackageHandleStandardArgs)
find_package_handle_standard_args(GLib
    REQUIRED_VARS GLib_LIBRARY
    VERSION_VAR GLib_VERSION)

19

cmake/FindGModule.cmake

Normal file

@ -0,0 +1,19 @@

include(PkgConfigWithFallback)
find_pkg_config_with_fallback(GModule
    PKG_CONFIG_NAME gmodule-2.0
    LIB_NAMES gmodule-2.0
    INCLUDE_NAMES gmodule.h
    INCLUDE_DIR_SUFFIXES glib-2.0 glib-2.0/include
    DEPENDS GLib
)

if(GModule_FOUND AND NOT GModule_VERSION)
    # TODO
    find_package(GLib ${GLib_GLOBAL_VERSION})
    set(GModule_VERSION ${GLib_VERSION})
endif()

include(FindPackageHandleStandardArgs)
find_package_handle_standard_args(GModule
    REQUIRED_VARS GModule_LIBRARY
    VERSION_VAR GModule_VERSION)

19

cmake/FindGObject.cmake

Normal file

@ -0,0 +1,19 @@

include(PkgConfigWithFallback)
find_pkg_config_with_fallback(GObject
    PKG_CONFIG_NAME gobject-2.0
    LIB_NAMES gobject-2.0
    INCLUDE_NAMES gobject/gobject.h
    INCLUDE_DIR_SUFFIXES glib-2.0 glib-2.0/include
    DEPENDS GLib
)

if(GObject_FOUND AND NOT GObject_VERSION)
    # TODO
    find_package(GLib ${GLib_GLOBAL_VERSION})
    set(GObject_VERSION ${GLib_VERSION})
endif()

include(FindPackageHandleStandardArgs)
find_package_handle_standard_args(GObject
    REQUIRED_VARS GObject_LIBRARY
    VERSION_VAR GObject_VERSION)

10

cmake/FindGPGME.cmake

Normal file

@ -0,0 +1,10 @@

include(PkgConfigWithFallbackOnConfigScript)
find_pkg_config_with_fallback_on_config_script(GPGME
    PKG_CONFIG_NAME gpgme
    CONFIG_SCRIPT_NAME gpgme
)

include(FindPackageHandleStandardArgs)
find_package_handle_standard_args(GPGME
    REQUIRED_VARS GPGME_LIBRARY
    VERSION_VAR GPGME_VERSION)

31

cmake/FindGTK3.cmake

Normal file

@ -0,0 +1,31 @@

include(PkgConfigWithFallback)
find_pkg_config_with_fallback(GTK3
    PKG_CONFIG_NAME gtk+-3.0
    LIB_NAMES gtk-3
    INCLUDE_NAMES gtk/gtk.h
    INCLUDE_DIR_SUFFIXES gtk-3.0 gtk-3.0/include gtk+-3.0 gtk+-3.0/include
    DEPENDS GDK3 ATK
)

if(GTK3_FOUND AND NOT GTK3_VERSION)
    find_file(GTK3_VERSION_HEADER "gtk/gtkversion.h" HINTS ${GTK3_INCLUDE_DIRS})
    mark_as_advanced(GTK3_VERSION_HEADER)

    if(GTK3_VERSION_HEADER)
        file(STRINGS "${GTK3_VERSION_HEADER}" GTK3_MAJOR_VERSION REGEX "^#define GTK_MAJOR_VERSION +\\(?([0-9]+)\\)?$")
        string(REGEX REPLACE "^#define GTK_MAJOR_VERSION \\(?([0-9]+)\\)?$" "\\1" GTK3_MAJOR_VERSION "${GTK3_MAJOR_VERSION}")
        file(STRINGS "${GTK3_VERSION_HEADER}" GTK3_MINOR_VERSION REGEX "^#define GTK_MINOR_VERSION +\\(?([0-9]+)\\)?$")
        string(REGEX REPLACE "^#define GTK_MINOR_VERSION \\(?([0-9]+)\\)?$" "\\1" GTK3_MINOR_VERSION "${GTK3_MINOR_VERSION}")
        file(STRINGS "${GTK3_VERSION_HEADER}" GTK3_MICRO_VERSION REGEX "^#define GTK_MICRO_VERSION +\\(?([0-9]+)\\)?$")
        string(REGEX REPLACE "^#define GTK_MICRO_VERSION \\(?([0-9]+)\\)?$" "\\1" GTK3_MICRO_VERSION "${GTK3_MICRO_VERSION}")
        set(GTK3_VERSION "${GTK3_MAJOR_VERSION}.${GTK3_MINOR_VERSION}.${GTK3_MICRO_VERSION}")
        unset(GTK3_MAJOR_VERSION)
        unset(GTK3_MINOR_VERSION)
        unset(GTK3_MICRO_VERSION)
    endif()
endif()

include(FindPackageHandleStandardArgs)
find_package_handle_standard_args(GTK3
    REQUIRED_VARS GTK3_LIBRARY
    VERSION_VAR GTK3_VERSION)

13

cmake/FindGee.cmake

Normal file

@ -0,0 +1,13 @@

include(PkgConfigWithFallback)
find_pkg_config_with_fallback(Gee
    PKG_CONFIG_NAME gee-0.8
    LIB_NAMES gee-0.8
    INCLUDE_NAMES gee.h
    INCLUDE_DIR_SUFFIXES gee-0.8 gee-0.8/include
    DEPENDS GObject
)

include(FindPackageHandleStandardArgs)
find_package_handle_standard_args(Gee
    REQUIRED_VARS Gee_LIBRARY
    VERSION_VAR Gee_VERSION)

20

cmake/FindGettext.cmake

Normal file

@ -0,0 +1,20 @@

find_program(XGETTEXT_EXECUTABLE xgettext)
find_program(MSGMERGE_EXECUTABLE msgmerge)
find_program(MSGFMT_EXECUTABLE msgfmt)
find_program(MSGCAT_EXECUTABLE msgcat)
mark_as_advanced(XGETTEXT_EXECUTABLE MSGMERGE_EXECUTABLE MSGFMT_EXECUTABLE MSGCAT_EXECUTABLE)

if(XGETTEXT_EXECUTABLE)
    execute_process(COMMAND ${XGETTEXT_EXECUTABLE} "--version"
                    OUTPUT_VARIABLE Gettext_VERSION
                    OUTPUT_STRIP_TRAILING_WHITESPACE)
    string(REGEX REPLACE "xgettext \\(GNU gettext-tools\\) ([0-9\\.]*).*" "\\1" Gettext_VERSION "${Gettext_VERSION}")
endif(XGETTEXT_EXECUTABLE)

include(FindPackageHandleStandardArgs)
find_package_handle_standard_args(Gettext
    FOUND_VAR Gettext_FOUND
    REQUIRED_VARS XGETTEXT_EXECUTABLE MSGMERGE_EXECUTABLE MSGFMT_EXECUTABLE MSGCAT_EXECUTABLE
    VERSION_VAR Gettext_VERSION)

set(GETTEXT_USE_FILE "${CMAKE_CURRENT_LIST_DIR}/UseGettext.cmake")

13

cmake/FindGnuTLS.cmake

Normal file

@ -0,0 +1,13 @@

include(PkgConfigWithFallback)
find_pkg_config_with_fallback(GnuTLS
    PKG_CONFIG_NAME gnutls
    LIB_NAMES gnutls
    INCLUDE_NAMES gnutls/gnutls.h
    INCLUDE_DIR_SUFFIXES gnutls gnutls/include
    DEPENDS GLib
)

include(FindPackageHandleStandardArgs)
find_package_handle_standard_args(GnuTLS
    REQUIRED_VARS GnuTLS_LIBRARY
    VERSION_VAR GnuTLS_VERSION)

14

cmake/FindGspell.cmake

Normal file

@ -0,0 +1,14 @@

include(PkgConfigWithFallback)
find_pkg_config_with_fallback(Gspell
    PKG_CONFIG_NAME gspell-1
    LIB_NAMES gspell-1
    INCLUDE_NAMES gspell.h
    INCLUDE_DIR_SUFFIXES gspell-1 gspell-1/gspell
    DEPENDS Gtk
)

include(FindPackageHandleStandardArgs)
find_package_handle_standard_args(Gspell
    REQUIRED_VARS Gspell_LIBRARY
    VERSION_VAR Gspell_VERSION)

12

cmake/FindGst.cmake

Normal file

@ -0,0 +1,12 @@

include(PkgConfigWithFallback)
find_pkg_config_with_fallback(Gst
    PKG_CONFIG_NAME gstreamer-1.0
    LIB_NAMES gstreamer-1.0
    INCLUDE_NAMES gst/gst.h
    INCLUDE_DIR_SUFFIXES gstreamer-1.0 gstreamer-1.0/include
)

include(FindPackageHandleStandardArgs)
find_package_handle_standard_args(Gst
    REQUIRED_VARS Gst_LIBRARY
    VERSION_VAR Gst_VERSION)

14

cmake/FindGstApp.cmake

Normal file

@ -0,0 +1,14 @@

include(PkgConfigWithFallback)
find_pkg_config_with_fallback(GstApp
    PKG_CONFIG_NAME gstreamer-app-1.0
    LIB_NAMES gstapp
    LIB_DIR_HINTS gstreamer-1.0
    INCLUDE_NAMES gst/app/app.h
    INCLUDE_DIR_SUFFIXES gstreamer-1.0 gstreamer-1.0/include gstreamer-app-1.0 gstreamer-app-1.0/include
    DEPENDS Gst
)

include(FindPackageHandleStandardArgs)
find_package_handle_standard_args(GstApp
    REQUIRED_VARS GstApp_LIBRARY
    VERSION_VAR GstApp_VERSION)

14

cmake/FindGstAudio.cmake

Normal file

@ -0,0 +1,14 @@

include(PkgConfigWithFallback)
find_pkg_config_with_fallback(GstAudio
    PKG_CONFIG_NAME gstreamer-audio-1.0
    LIB_NAMES gstaudio
    LIB_DIR_HINTS gstreamer-1.0
    INCLUDE_NAMES gst/audio/audio.h
    INCLUDE_DIR_SUFFIXES gstreamer-1.0 gstreamer-1.0/include gstreamer-audio-1.0 gstreamer-audio-1.0/include
    DEPENDS Gst
)

include(FindPackageHandleStandardArgs)
find_package_handle_standard_args(GstAudio
    REQUIRED_VARS GstAudio_LIBRARY
    VERSION_VAR GstAudio_VERSION)

19

cmake/FindGstRtp.cmake

Normal file

@ -0,0 +1,19 @@

include(PkgConfigWithFallback)
find_pkg_config_with_fallback(GstRtp
    PKG_CONFIG_NAME gstreamer-rtp-1.0
    LIB_NAMES gstrtp
    LIB_DIR_HINTS gstreamer-1.0
    INCLUDE_NAMES gst/rtp/rtp.h
    INCLUDE_DIR_SUFFIXES gstreamer-1.0 gstreamer-1.0/include gstreamer-rtp-1.0 gstreamer-rtp-1.0/include
    DEPENDS Gst
)

if(GstRtp_FOUND AND NOT GstRtp_VERSION)
    find_package(Gst)
    set(GstRtp_VERSION ${Gst_VERSION})
endif()

include(FindPackageHandleStandardArgs)
find_package_handle_standard_args(GstRtp
    REQUIRED_VARS GstRtp_LIBRARY
    VERSION_VAR GstRtp_VERSION)

14

cmake/FindGstVideo.cmake

Normal file

@ -0,0 +1,14 @@

include(PkgConfigWithFallback)
find_pkg_config_with_fallback(GstVideo
    PKG_CONFIG_NAME gstreamer-video-1.0
    LIB_NAMES gstvideo
    LIB_DIR_HINTS gstreamer-1.0
    INCLUDE_NAMES gst/video/video.h
    INCLUDE_DIR_SUFFIXES gstreamer-1.0 gstreamer-1.0/include gstreamer-video-1.0 gstreamer-video-1.0/include
    DEPENDS Gst
)

include(FindPackageHandleStandardArgs)
find_package_handle_standard_args(GstVideo
    REQUIRED_VARS GstVideo_LIBRARY
    VERSION_VAR GstVideo_VERSION)

11

cmake/FindICU.cmake

Normal file

@ -0,0 +1,11 @@

include(PkgConfigWithFallback)
find_pkg_config_with_fallback(ICU
    PKG_CONFIG_NAME icu-uc
    LIB_NAMES icuuc icudata
    INCLUDE_NAMES unicode/umachine.h
)

include(FindPackageHandleStandardArgs)
find_package_handle_standard_args(ICU
    REQUIRED_VARS ICU_LIBRARY
    VERSION_VAR ICU_VERSION)

13

cmake/FindNice.cmake

Normal file

@ -0,0 +1,13 @@

include(PkgConfigWithFallback)
find_pkg_config_with_fallback(Nice
    PKG_CONFIG_NAME nice
    LIB_NAMES nice
    INCLUDE_NAMES nice.h
    INCLUDE_DIR_SUFFIXES nice nice/include
    DEPENDS GIO
)

include(FindPackageHandleStandardArgs)
find_package_handle_standard_args(Nice
    REQUIRED_VARS Nice_LIBRARY
    VERSION_VAR Nice_VERSION)

33

cmake/FindPango.cmake

Normal file

@ -0,0 +1,33 @@

include(PkgConfigWithFallback)
find_pkg_config_with_fallback(Pango
    PKG_CONFIG_NAME pango
    LIB_NAMES pango-1.0
    INCLUDE_NAMES pango/pango.h
    INCLUDE_DIR_SUFFIXES pango-1.0 pango-1.0/include
    DEPENDS GObject
)

if(Pango_FOUND AND NOT Pango_VERSION)
    find_file(Pango_FEATURES_HEADER "pango/pango-features.h" HINTS ${Pango_INCLUDE_DIRS})
    mark_as_advanced(Pango_FEATURES_HEADER)

    if(Pango_FEATURES_HEADER)
        file(STRINGS "${Pango_FEATURES_HEADER}" Pango_MAJOR_VERSION REGEX "^#define PANGO_VERSION_MAJOR +\\(?([0-9]+)\\)?$")
        string(REGEX REPLACE "^#define PANGO_VERSION_MAJOR \\(?([0-9]+)\\)?$" "\\1" Pango_MAJOR_VERSION "${Pango_MAJOR_VERSION}")
        file(STRINGS "${Pango_FEATURES_HEADER}" Pango_MINOR_VERSION REGEX "^#define PANGO_VERSION_MINOR +\\(?([0-9]+)\\)?$")
        string(REGEX REPLACE "^#define PANGO_VERSION_MINOR \\(?([0-9]+)\\)?$" "\\1" Pango_MINOR_VERSION "${Pango_MINOR_VERSION}")
        file(STRINGS "${Pango_FEATURES_HEADER}" Pango_MICRO_VERSION REGEX "^#define PANGO_VERSION_MICRO +\\(?([0-9]+)\\)?$")
        string(REGEX REPLACE "^#define PANGO_VERSION_MICRO \\(?([0-9]+)\\)?$" "\\1" Pango_MICRO_VERSION "${Pango_MICRO_VERSION}")
        set(Pango_VERSION "${Pango_MAJOR_VERSION}.${Pango_MINOR_VERSION}.${Pango_MICRO_VERSION}")
        unset(Pango_MAJOR_VERSION)
        unset(Pango_MINOR_VERSION)
        unset(Pango_MICRO_VERSION)
    endif()
endif()

include(FindPackageHandleStandardArgs)
find_package_handle_standard_args(Pango
    FOUND_VAR Pango_FOUND
    REQUIRED_VARS Pango_LIBRARY
    VERSION_VAR Pango_VERSION
)

11

cmake/FindQrencode.cmake

Normal file